Our team at Lumia took a deep dive into the ChatGPT macOS desktop app’s certificate pinning mechanism – and uncovered something surprising. ChatGPT maintains a certificate pinning whitelist… and we managed to identify most of those certificates. Their identities may surprise you. For the first time ever, public insights are now available on OpenAI’s pinning strategy.

[Update – November 20, 2025]

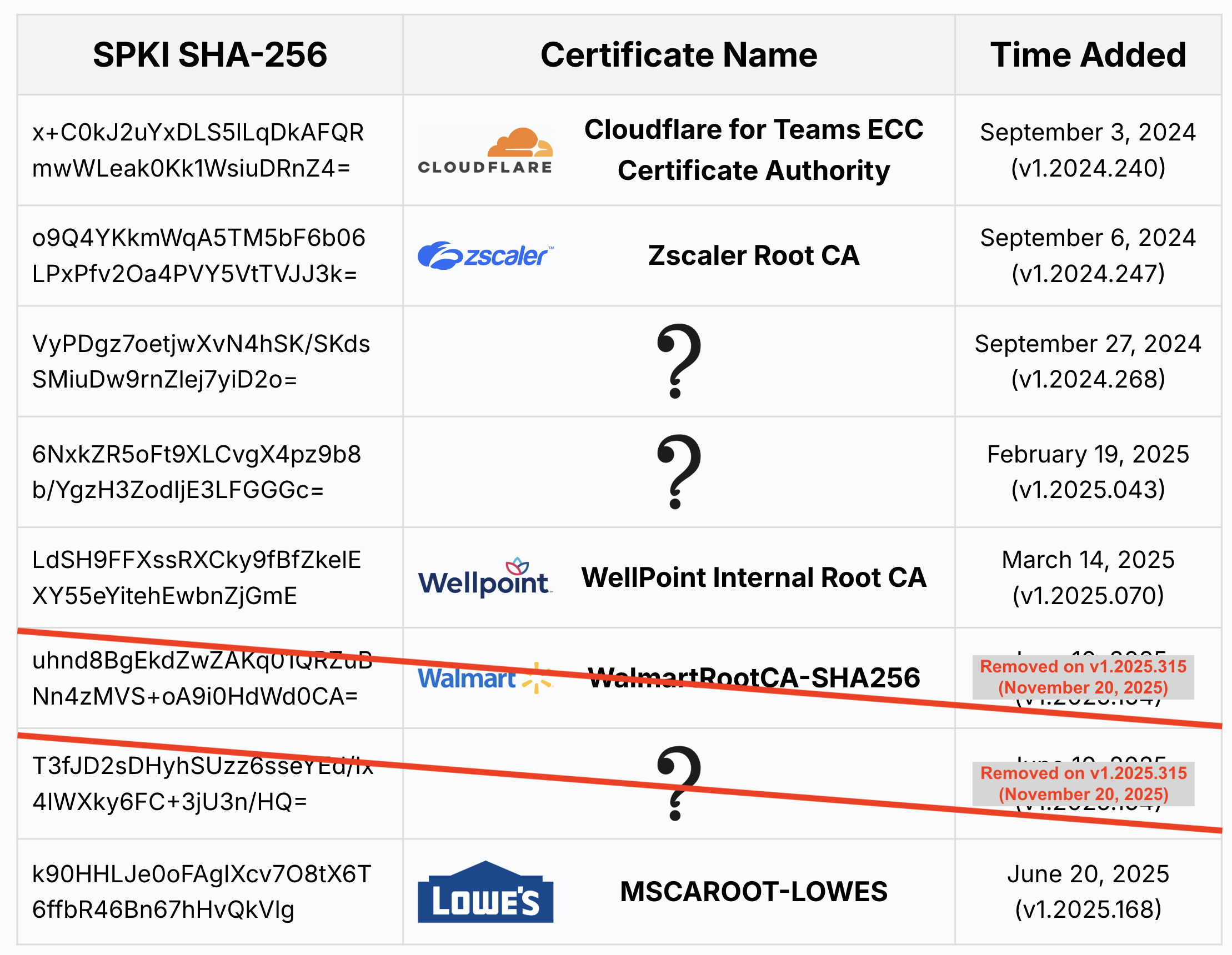

Version 1.2025.315 of the ChatGPT macOS app was released on November 20, 2025, and includes a major cleanup of the whitelisted certificates. The list has been reduced from 48 certificates to 35. This is the first time in five months that OpenAI has updated the list.

Walmart's Root CA, which was added to the allowlist on June 10, 2025 (v1.2025.154), is no longer included. An unidentified certificate, added on the same date, has also been removed.

The remaining removed certificates are public CAs that are no longer trusted by common certificate stores.

To summarize: as of today, the ChatGPT macOS app allowlists six non-standard certificates used for SSL inspection:

- Cloudflare's (expired) certificate

- Zscaler's ZIA root CA

- WellPoint Internal Root CA

- Lowe's Root CA

- Unidentified certificate #1

- Unidentified certificate #2

Introduction

Nowadays, it’s hard to find anyone who hasn’t interacted with ChatGPT. Thanks to its convenience and versatility, many users choose to engage through OpenAI’s dedicated apps, whether on their phones or computers. Given the sensitive nature of the conversations people have with ChatGPT, privacy for OpenAI’s individual and enterprise users is of utmost importance. To explore this, we analyzed ChatGPT’s Mac desktop application. What we found raises important questions about fairness and the ability of smaller organizations to protect themselves.

Certificate Pinning: a Double-Edged Sword

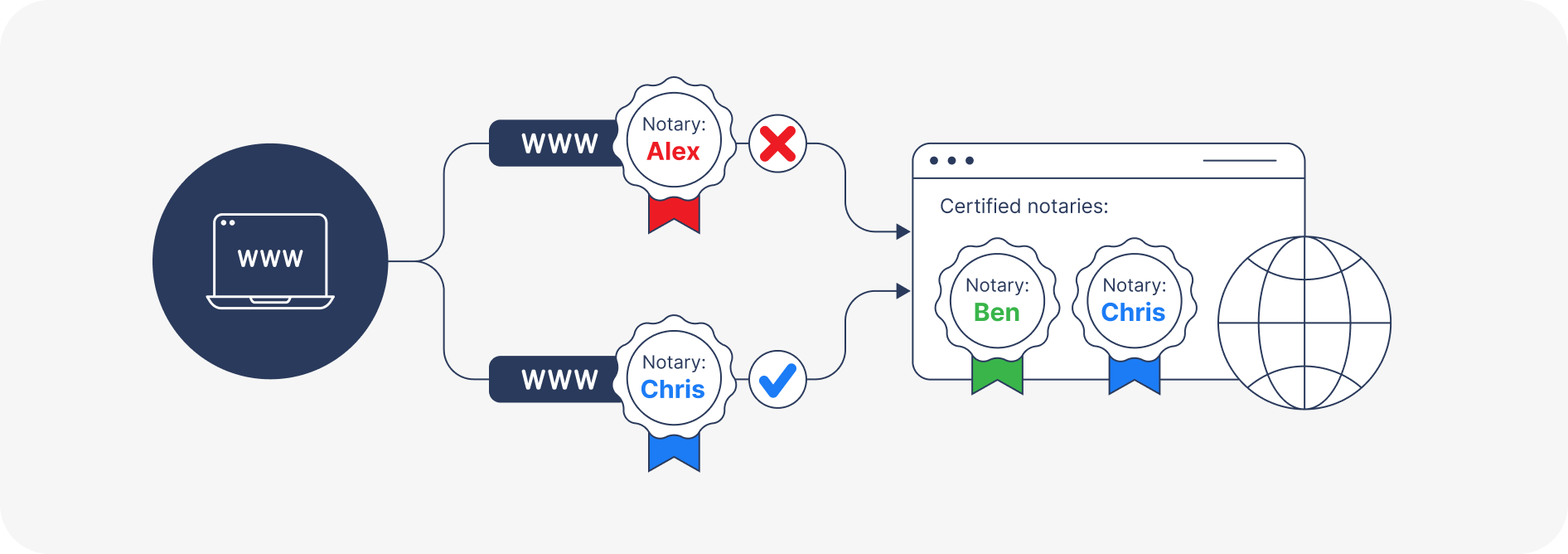

Normally, a client validates a server by checking that its SSL/TLS certificate chains up to a trusted certificate authority. With certificate pinning (Figure 1), the client further restricts which certificates it accepts, typically to a specific known certificate, public key, or issuer. This blocks man-in-the-middle interception, whether by an external adversary or for research purposes, because a certificate issued by a locally added trusted root (for example) will not match any of the pins.
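To make the mechanism concrete, here is a minimal Python sketch of SPKI-based pinning in the general style of HTTP Public Key Pinning (RFC 7469). It is not OpenAI's code, whose internals we have not seen. It assumes the platform has already validated the chain normally and exposes each certificate's DER-encoded SubjectPublicKeyInfo; the function name `spki_pin_ok` and all the placeholder key bytes are hypothetical.

```python
import hashlib


def spki_pin_ok(chain_spki_der: list, pinned_sha256_hex: set) -> bool:
    """Return True if any certificate in an already-validated chain has an
    SPKI whose SHA-256 digest (lowercase hex) appears in the pin set.

    Hypothetical helper: pinning is an extra check on top of normal chain
    validation, not a replacement for it.
    """
    return any(
        hashlib.sha256(spki).hexdigest() in pinned_sha256_hex
        for spki in chain_spki_der
    )


# Placeholder byte strings standing in for real DER-encoded SPKIs.
public_root_spki = b"placeholder: SPKI of a public CA root"
leaf_spki = b"placeholder: SPKI of the server's real leaf certificate"
inspection_root_spki = b"placeholder: SPKI of an SWG's SSL-inspection root"
proxy_leaf_spki = b"placeholder: SPKI of a leaf minted by the inspection proxy"

pins = {hashlib.sha256(public_root_spki).hexdigest()}

# Direct connection: the chain ends at a pinned public root, so it is accepted.
print(spki_pin_ok([leaf_spki, public_root_spki], pins))              # True
# Inspected connection: the proxy re-signs with its own root, which the OS
# trusts but the pin set does not contain, so the connection is rejected.
print(spki_pin_ok([proxy_leaf_spki, inspection_root_spki], pins))    # False
```

Under this model, an SSL-inspection root is accepted only if its SPKI hash is added to the pin set, which is consistent with our working assumption about the vendor and enterprise entries discussed below.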

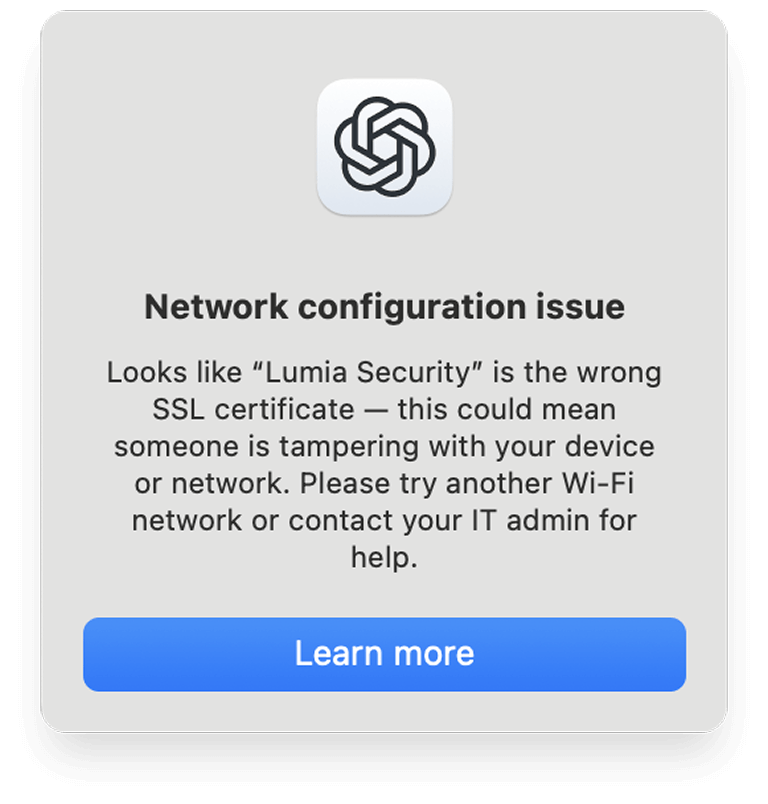

The macOS version of the ChatGPT app implements an SSL/TLS certificate pinning mechanism to prevent third parties from peeking into its communications. This means that if the ChatGPT app detects a root certificate that is not in its list of allowed issuers, it displays a message and will not start (see Figure 2).

While certificate pinning is a powerful technique for strengthening the TLS trust model, it doesn’t just block malicious actors; it also prevents organizations from inspecting outbound traffic, because they cannot insert a custom certificate to decrypt TLS traffic.

This presents a significant challenge for companies focused on preventing data leakage, since security teams typically rely on SSL inspection (a capability built into most Secure Web Gateways (SWGs), next-generation firewalls, and other security solutions) to monitor and detect sensitive information leaving the organization.

When certificate pinning is in place, SSL inspection becomes impractical, limiting the organization’s visibility and undermining the ability to enforce its security policies effectively.

Uncovering ChatGPT's Trusted Certificates

Although OpenAI has not documented it, we recently uncovered how ChatGPT’s certificate pinning mechanism works.

Buried within the macOS ChatGPT binary, we found a list of trusted root certificates that ChatGPT uses to authenticate servers. Specifically, the list consists of the SHA-256 hash of each certificate’s public key information (its Subject Public Key Info, or SPKI). Fingerprinting a certificate by its key information is a common and effective practice, because a certificate can be reissued with an extended validity period under the same key without changing its identifier.
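The difference between a certificate fingerprint and an SPKI hash can be shown with a short, dependency-free Python sketch: the fingerprint hashes the whole DER certificate, while the SPKI hash covers only the SubjectPublicKeyInfo field. The DER walker is deliberately minimal (it assumes well-formed input and does no error handling); in real code, a library such as `cryptography` can export the same bytes via `public_key().public_bytes(Encoding.DER, PublicFormat.SubjectPublicKeyInfo)`. The helper names and the toy certificates built at the end are hypothetical, unsigned placeholders, not real X.509 certificates.

```python
import hashlib


def read_tlv(data: bytes, pos: int) -> tuple:
    """Parse one DER tag-length-value at pos; return (tag, content_start, end)."""
    tag, length = data[pos], data[pos + 1]
    pos += 2
    if length & 0x80:  # long form: low 7 bits give the number of length bytes
        n = length & 0x7F
        length = int.from_bytes(data[pos:pos + n], "big")
        pos += n
    return tag, pos, pos + length


def extract_spki(cert_der: bytes) -> bytes:
    """Return the DER-encoded SubjectPublicKeyInfo of an X.509 certificate."""
    _, cert_start, _ = read_tlv(cert_der, 0)    # Certificate ::= SEQUENCE
    _, pos, _ = read_tlv(cert_der, cert_start)  # TBSCertificate ::= SEQUENCE
    tag, _, end = read_tlv(cert_der, pos)
    if tag == 0xA0:                             # optional [0] version field
        pos = end
    for _ in range(5):  # skip serialNumber, signature, issuer, validity, subject
        _, _, pos = read_tlv(cert_der, pos)
    _, _, spki_end = read_tlv(cert_der, pos)
    return cert_der[pos:spki_end]


def fingerprints(cert_der: bytes) -> tuple:
    """Return (certificate SHA-256 fingerprint, SPKI SHA-256 hash) as hex."""
    return (hashlib.sha256(cert_der).hexdigest(),
            hashlib.sha256(extract_spki(cert_der)).hexdigest())


# --- Toy demo: two placeholder "certificates", same key, different expiry ---
def tlv(tag: int, content: bytes) -> bytes:
    """Encode one DER TLV (short or long length form)."""
    n = len(content)
    if n < 0x80:
        return bytes([tag, n]) + content
    size = n.to_bytes((n.bit_length() + 7) // 8, "big")
    return bytes([tag, 0x80 | len(size)]) + size + content


def toy_cert(not_after: bytes, spki: bytes) -> bytes:
    """Build a DER-shaped, unsigned placeholder certificate."""
    validity = tlv(0x30, tlv(0x17, b"250101000000Z") + tlv(0x17, not_after))
    tbs = tlv(0x30, tlv(0xA0, tlv(0x02, b"\x02"))  # version v3
              + tlv(0x02, b"\x01")                 # serialNumber
              + tlv(0x30, b"")                     # signature (placeholder)
              + tlv(0x30, b"")                     # issuer (placeholder)
              + validity
              + tlv(0x30, b"")                     # subject (placeholder)
              + spki)
    return tlv(0x30, tbs + tlv(0x30, b"") + tlv(0x03, b"\x00"))


key = tlv(0x30, tlv(0x30, b"") + tlv(0x03, b"\x00" + bytes(65)))
original = toy_cert(b"260101000000Z", key)
renewed = toy_cert(b"360101000000Z", key)  # same key, validity extended

print(fingerprints(original)[0] == fingerprints(renewed)[0])  # False
print(fingerprints(original)[1] == fingerprints(renewed)[1])  # True
```

Because the renewed certificate keeps its key, a pin on the SPKI hash keeps matching, while a pin on the full certificate fingerprint would have to be updated.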

The trusted root certificate list contains the standard root certificates, those you’d expect to find in the Windows or macOS trust stores (such as DigiCert, Let’s Encrypt, and GlobalSign), which are easy to identify. In addition to the standard certificates, ChatGPT’s whitelist contains six additional certificates that do not typically appear in standard certificate stores. Because the list contains only the SPKI hashes, identifying these certificates is challenging.

However, Lumia researchers were able to identify four of these six certificates and are still searching for the rest (see Table 3). We have been tracking these changes over time; as of this writing, the latest app version is 1.2025.315.
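Because the allowlist is just a set of SPKI hashes, tracking it across releases reduces to a set difference. The sketch below is a hypothetical helper, not Lumia's tooling. It uses only the SPKI hashes published in this post as an illustrative subset of the full list (the Cloudflare and Zscaler hashes are not included), and the before/after sets reflect the November 20, 2025 removals described in the update above.

```python
# SPKI SHA-256 hashes published in this post (an illustrative subset of the
# allowlist; the Cloudflare and Zscaler entries are not included).
WELLPOINT = "2dd487f45157b2c4570a4cbd7c17d991e9445d8e797988ad7a11306e76631a61"
WALMART = "d416fe1b6ae36f168ab5d55433050b4909bb93ae6f2ad328f3af68d5a3b78d40"
LOWES = "93dd071cb25ed281408085dcbfb3bcb57e93e9f7db478e819faee11ef4245658"
UNIDENTIFIED_1 = "5723c3833ee87ad8f05ef3788522bf48a76c48c8ae0f0f6b9d995e8fbca20f6a"
UNIDENTIFIED_2 = "e8dc64651e6816df572c2be05f8a73f5bf1bfd88331f76687488c4dcb1461867"
UNIDENTIFIED_3 = "4f77c90f6b031f2852533cfab2c79811dfc8c789565e4cba142fb78d4de7fc74"


def diff_allowlists(old: set, new: set) -> tuple:
    """Return (added, removed) SPKI hashes between two app versions."""
    return new - old, old - new


# Entries discussed in this post, before and after version 1.2025.315.
before_update = {WELLPOINT, WALMART, LOWES,
                 UNIDENTIFIED_1, UNIDENTIFIED_2, UNIDENTIFIED_3}
after_update = {WELLPOINT, LOWES, UNIDENTIFIED_1, UNIDENTIFIED_2}

added, removed = diff_allowlists(before_update, after_update)
print(len(added), len(removed))  # 0 2
```

Applied to the full lists extracted from two app binaries, the same comparison would surface every entry added or removed between releases.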

Cloudflare ZTNA

The first non-standard root certificate was added to the ChatGPT macOS allowlist on September 3, 2024 (app version 1.2024.240). We identified it as belonging to Cloudflare’s ZTNA solution; it was likely added so that Cloudflare could perform SSL inspection of ChatGPT traffic. However, this certificate expired on February 2, 2025 and was not replaced (expect a blog post on this soon).

As a result, Cloudflare’s ZTNA can no longer perform SSL inspection on ChatGPT for macOS.

Zscaler Internet Access

Three days later, on September 6, 2024, another version of ChatGPT for macOS was released (version 1.2024.247). This version added a new certificate to the list, which we identified as belonging to Zscaler and used for SSL inspection in Zscaler Internet Access. Thanks to its inclusion in the ChatGPT macOS allowlist, Zscaler is, as far as we can tell, currently the only Secure Web Gateway solution that enables SSL interception of ChatGPT traffic on macOS.

By integrating with various SWG solutions, including Zscaler, Lumia is able to provide visibility into encrypted ChatGPT traffic on macOS as well.

Enterprise-Owned Certificates

Starting with version 1.2025.070 on March 14, 2025, we observed OpenAI adding enterprise-owned certificates, rather than vendor certificates, to the list of certificates allowed by ChatGPT for macOS.

Our working assumption is that OpenAI adds enterprise-owned certificates to allow individual organizations, using their own root CAs, to perform SSL inspection on encrypted ChatGPT traffic on macOS.

WellPoint

We were able to identify the certificate belonging to WellPoint (“WellPoint Internal Root CA”). It was added on March 14, 2025 (version 1.2025.070).

Fingerprint (SHA256):

a85ceec1530253211d11cf31ce05c23b2d8cab80905fa571049566a62e95a969

SPKI (SHA256):

2dd487f45157b2c4570a4cbd7c17d991e9445d8e797988ad7a11306e76631a61

Walmart

Three months later, on June 10, 2025 (version 1.2025.154), Walmart’s root certificate (“WalmartRootCA-SHA256”) was added.

However, for a reason unknown to us, the certificate was removed from the list five months later, on November 20, 2025 (version 1.2025.315).

Fingerprint (SHA256):

a85ceec1530253211d11cf31ce05c23b2d8cab80905fa571049566a62e95a969

SPKI (SHA256):

d416fe1b6ae36f168ab5d55433050b4909bb93ae6f2ad328f3af68d5a3b78d40

Lowe's

Lowe’s certificate (“MSCAROOT-LOWES”) was added to the SSL inspection allowlist on June 20, 2025 (version 1.2025.168).

Fingerprint (SHA256):

57e2da474242a0d0c18bf9777c2eb9d9fff1b6901d7210a59e53a26671d77a2a

SPKI (SHA256):

93dd071cb25ed281408085dcbfb3bcb57e93e9f7db478e819faee11ef4245658

Two Unidentified Certificates

Two certificates from the allowlist (marked as ? in the table above) remain unidentified, even after a thorough search across various certificate transparency logs. We assume these certificates also belong to individual enterprises and were added through a mechanism similar to that used for the enterprise-owned certificates that we were able to identify.

If you manage to identify any of them, feel free to drop us a note at bobi@lumia.security.

Another unidentified certificate, which is no longer included, was added on June 10, 2025 (version 1.2025.154), together with the Walmart Root CA. Both certificates were removed on November 20, 2025 (version 1.2025.315). This suggests that this certificate may also have belonged to Walmart, but we were not able to confirm it.

Unidentified certificate #1 SPKI (SHA256):

5723c3833ee87ad8f05ef3788522bf48a76c48c8ae0f0f6b9d995e8fbca20f6a

Unidentified certificate #2 SPKI (SHA256):

e8dc64651e6816df572c2be05f8a73f5bf1bfd88331f76687488c4dcb1461867

Unidentified certificate #3 SPKI (SHA256) [Removed from the list on November 20, 2025; Walmart's?]:

4f77c90f6b031f2852533cfab2c79811dfc8c789565e4cba142fb78d4de7fc74
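If you administer an internal root CA and want to check it against these entries, one way (assuming the root is saved as `root.pem`, a hypothetical filename) is to compute its SPKI hash with `openssl x509 -in root.pem -pubkey -noout | openssl pkey -pubin -outform der | openssl dgst -sha256` and compare the result with the hashes above. The Python helper below is a minimal, hypothetical sketch that accepts that output line (whose exact prefix varies between OpenSSL versions) or a colon-separated hex digest, and looks it up among the three unidentified hashes.

```python
import re
from typing import Optional

# SPKI SHA-256 hashes from this post that remain unidentified.
UNIDENTIFIED = {
    "5723c3833ee87ad8f05ef3788522bf48a76c48c8ae0f0f6b9d995e8fbca20f6a":
        "Unidentified certificate #1",
    "e8dc64651e6816df572c2be05f8a73f5bf1bfd88331f76687488c4dcb1461867":
        "Unidentified certificate #2",
    "4f77c90f6b031f2852533cfab2c79811dfc8c789565e4cba142fb78d4de7fc74":
        "Unidentified certificate #3 (removed November 20, 2025)",
}


def parse_sha256(text: str) -> str:
    """Normalize openssl dgst output ("SHA2-256(stdin)= ab12...") or a
    colon-separated digest ("AB:12:...") to 64 lowercase hex characters."""
    # Keep only what follows the last "=", then drop non-hex characters.
    digest = re.sub(r"[^0-9a-f]", "", text.rsplit("=", 1)[-1].lower())
    if len(digest) != 64:
        raise ValueError(f"expected a SHA-256 digest, got {text!r}")
    return digest


def match_unidentified(text: str) -> Optional[str]:
    """Return the matching label, or None if the hash is not one of them."""
    return UNIDENTIFIED.get(parse_sha256(text))


print(match_unidentified(
    "SHA2-256(stdin)= 5723c3833ee87ad8f05ef3788522bf48a76c48c8ae0f0f6b9d995e8fbca20f6a"
))  # Unidentified certificate #1
```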

Bottom Line: Certificate Pinning Hurts Organizations

This is not a vulnerability or security issue – we haven’t found any way for an attacker to exploit OpenAI’s pinning exemption mechanism to gain access or bypass validation.

As demonstrated, however, certificate pinning is a poor practice, especially in environments where data leakage is a concern and monitoring or governance is necessary. Furthermore, allowing certificate pinning exceptions for large enterprises while offering no option for smaller organizations to monitor traffic is unfair and widens the privacy gap. OpenAI should either remove certificate pinning from the native Mac app entirely or avoid allowing exceptions of any kind. Even the Compliance API – OpenAI’s alternative monitoring mechanism – is limited to the costly Enterprise tier, accessible only to large organizations.
